The 8 languages of data science

Data keeps arriving. A data scientist's job is to organize those endless pieces into a coherent analysis so that data users can start finding answers in the sea of data. Fortunately, there are many excellent programming languages for this work. But is there a single best choice?

Languages like R and Python get most of the attention, partly because they are used so often in data science courses. They make excellent first picks, and it is hard to go wrong with either.

Other options are available as well, and they can all do the job well. General-purpose languages that already form the foundation of a core workflow can be extended to handle data cleaning, filtering, and analysis. A good library can make a big difference.

Here is a list of some of the top data science languages to consider for your next project. When one language is not enough, the answer is to use several: some data scientists build data pipelines that use a different technology at each stage, drawing on the best features of each.

R

R remains a favorite among many serious data scientists because it was created for statistical analysis. Data structures such as data frames, which handle large blocks of tabular data, are built right into the language. Excellent open-source libraries implement many of the most popular statistical and mathematical techniques that scientists have developed and released over the years. There are even tools such as Sweave and knitr that turn an analysis into polished, LaTeX-typeset reports.

RStudio, an integrated development environment tailored to this kind of work, is a popular choice among data scientists. Others prefer general development tools such as Eclipse, or the command line, because they want to combine R with code written in other languages that gathers or pre-cleans the data. R works alongside these other tools without much difficulty.

Who should use it: people with a wide range of data science and statistical analysis needs.

Python

Python began as a scripting language with a simple syntax, but it has since become one of the most popular languages in labs around the world. Many scientists learn Python so they can handle all of their computing needs, from data gathering to analysis.
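To make the "from data gathering to analysis" idea concrete, here is a minimal sketch that uses only Python's standard library (the csv and statistics modules) to parse a small dataset, drop incomplete rows, and summarize what remains. The data and the function names gather, clean, and analyze are hypothetical illustrations, not part of any particular library.

```python
import csv
import io
import statistics

# Hypothetical raw data, standing in for a downloaded file or an API response.
RAW = """city,temp_c
Oslo,4.5
Lima,19.0
Cairo,
Perth,22.5
Oslo,5.5
"""


def gather(text: str) -> list[dict[str, str]]:
    """Gathering: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows: list[dict[str, str]]) -> list[dict]:
    """Cleaning: drop rows with a missing temperature, convert the rest to float."""
    return [
        {"city": row["city"], "temp_c": float(row["temp_c"])}
        for row in rows
        if row["temp_c"].strip()
    ]


def analyze(rows: list[dict]) -> dict[str, float]:
    """Analysis: count, mean, and sample standard deviation of the temperatures."""
    temps = [row["temp_c"] for row in rows]
    return {
        "n": len(temps),
        "mean": statistics.mean(temps),
        "stdev": statistics.stdev(temps),
    }


summary = analyze(clean(gather(RAW)))
print(summary)
```

In practice, many scientists would do the same steps with pandas, for example pandas.read_csv followed by dropna and describe; the standard-library version is shown here only so the example runs without extra packages.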

The language's main strength is its extensive collection of data science libraries. Well-known packages include NumPy, SciPy, pandas, and Keras. Researchers have also combined Python with parallel programming frameworks such as Apache Spark (through its PySpark interface) to process particularly large datasets.

Python is also very popular with artificial intelligence researchers and with anyone using AI to assist in data analysis. Frameworks such as PyTorch and TensorFlow can run on specialized hardware such as GPUs to speed up analysis significantly.

Best for: beginners and people with extensive general-purpose requirements.

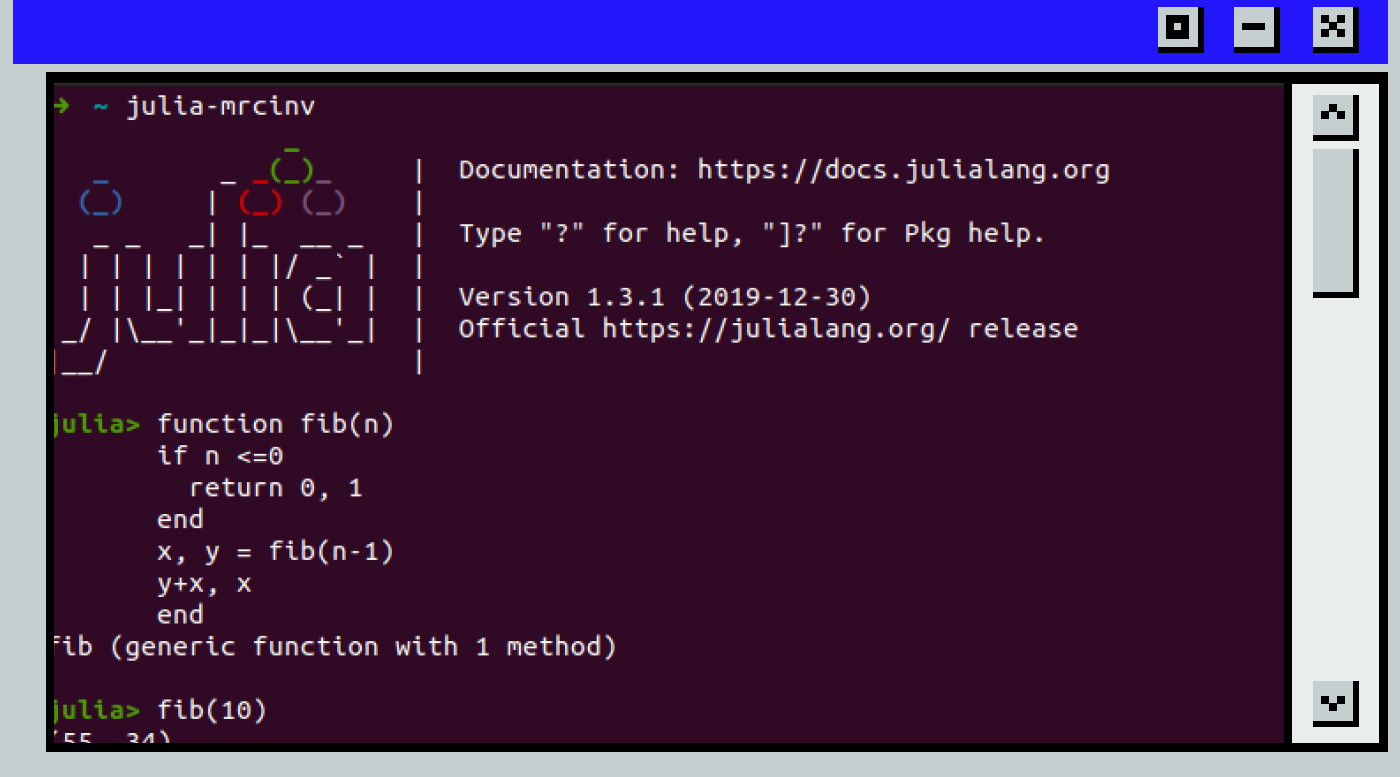

Julia

Julia is a general-purpose language that can handle fundamental tasks such as I/O, but it has drawn the attention of many scientists because it is particularly effective at numerical work. It now offers a solid selection of tools for data science, machine learning (ML), and visualization. For instance, good libraries support work on differential equations, Fourier transforms, and quantum physics. Julia's package ecosystem includes more than 4,000 packages for various tasks.

Julia's speed is what makes it so appealing. Scientists often find that Julia code runs several times faster than equivalent code in interpreted languages, because Julia's compiler translates it into native machine code for a variety of chip architectures. Julia programmers can also work in familiar environments such as Jupyter Notebooks.

Best for: serious research and mathematical analysis.

Java

Java is a general-purpose language, and some data scientists use it as a tool for preprocessing and cleaning data. Because it offers more generic capabilities and libraries that help with low-level cleanup, it pairs well with languages like R. It is also well supported by big data frameworks: Hadoop is written in Java, and Spark runs on the Java Virtual Machine. For simple tasks, built-in classes such as DoubleSummaryStatistics can quickly produce dataset summaries. Java can also use machine learning libraries such as Spark's MLlib.

Best for: general-purpose work and light analysis of big data.

MATLAB

MATLAB was originally developed to make large matrices easy to work with, and data scientists who want to apply numerical techniques to their work frequently turn to it. Mathematical algorithms that use vectors, matrices, and tensors and rely on common decompositions or inversions can be implemented in a straightforward way.
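As an illustration of the kind of matrix algorithm meant here (our own example, not taken from MathWorks material), consider fitting a linear model by least squares. Assume a data matrix X with n rows and p linearly independent columns, and a vector of observations y. The textbook solution uses a matrix inverse; a numerically safer route uses a thin QR decomposition X = QR, where Q has orthonormal columns and R is a p-by-p upper triangular matrix:

```latex
\begin{aligned}
\hat{\beta} &= \arg\min_{\beta} \lVert y - X\beta \rVert_2^2 = (X^\top X)^{-1} X^\top y \\
X &= QR \;\Longrightarrow\; X^\top X = R^\top R,\quad X^\top y = R^\top Q^\top y \;\Longrightarrow\; R\,\hat{\beta} = Q^\top y
\end{aligned}
```

The last equation is solved by back substitution, so the inverse is never formed explicitly. In MATLAB, the backslash operator X \ y returns a least-squares solution when X is rectangular, so this whole derivation collapses into a single expression.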

MathWorks, the company that maintains MATLAB as proprietary software, has steadily added capabilities over time, turning it into a fully integrated development environment for data science. Its libraries cover most major statistical techniques, AI procedures, and machine learning algorithms, and built-in graphics tools turn the results into data visualizations.

Most suitable for: hard sciences that rely on matrix and vector analysis.

COBOL

The original business computing language remains a solid foundation for data science in many companies. COBOL was created to collect and process business data, and its intrinsic functions cover traditional statistics such as MEAN, MEDIAN, VARIANCE, and STANDARD-DEVIATION. Many large corporations still run software stacks written in COBOL, and adding a few new COBOL routines is often the most straightforward way to bring data science into them.

Best for: enterprise data analytics and well-established code bases.

SPSS

The acronym SPSS stood for Statistical Package for the Social Sciences when the software was introduced in 1968, and it was later changed to Statistical Product and Service Solutions as the business grew. IBM now owns and maintains the SPSS software suite as part of its broad portfolio of software that businesses use for data science.

Pull-down menus and an integrated environment let users complete much of their SPSS work without extensive scripting. When that is not enough, a macro language makes it simple to extend the basic routines, and recent releases also allow some of these extensions to be written in R or Python. The recently released SPSS version 29 added more tools for time-series analysis and linear regression.

Ideal for: traditional statistics and data interpretation.

Mathematica

Some mathematicians regard Mathematica as one of the most astonishing pieces of software ever made because it can solve some of the trickiest mathematical problems. Its features and libraries go well beyond what most data scientists need, but the foundations are strong, the graphics are excellent, and the possibilities are wide open for anyone who wants to explore more advanced methods.

Best for: complex experiments and data scientists who care most about the mathematics.

A hybrid strategy

Although each of these languages has its supporters and markets where it excels, data scientists frequently combine code from several languages in a single pipeline. They might use a language like Java or COBOL for most of the preprocessing and filtering, then switch to a language with a strong statistical foundation, such as R, for the analysis. Finally, they might use yet another language for data visualization if it offers a particular style of graph they prefer.

Each stage plays to the strengths of its language. You do not have to pick only one.
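The stage-by-stage idea can be sketched as follows. This is a minimal illustration under one simplifying assumption: every stage reads and writes plain CSV text, so any stage could in principle be replaced by a program written in another language. To keep the example self-contained, both stages are written in Python; the stage names, the data, and the group/value columns are hypothetical.

```python
import csv
import io
import statistics
from typing import Callable

# Assumption: stages exchange CSV text, a format most languages can read and write.
Stage = Callable[[str], str]


def preprocess(raw: str) -> str:
    """Stage 1 (stand-in for, e.g., a Java or COBOL job): drop incomplete rows."""
    reader = csv.reader(io.StringIO(raw))
    header = next(reader)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(header)
    for row in reader:
        if len(row) == len(header) and all(cell.strip() for cell in row):
            writer.writerow(row)
    return out.getvalue()


def analyze(clean: str) -> str:
    """Stage 2 (stand-in for, e.g., an R script): mean value per group."""
    groups: dict[str, list[float]] = {}
    for row in csv.DictReader(io.StringIO(clean)):
        groups.setdefault(row["group"], []).append(float(row["value"]))
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["group", "mean"])
    for name in sorted(groups):
        writer.writerow([name, statistics.mean(groups[name])])
    return out.getvalue()


def run_pipeline(data: str, stages: list[Stage]) -> str:
    """Feed the output of each stage into the next one."""
    for stage in stages:
        data = stage(data)
    return data


RAW = "group,value\na,1\na,3\nb,10\nb,\nc,7\n"
print(run_pipeline(RAW, [preprocess, analyze]))
```

Because the stages agree only on the CSV format, a stage could instead wrap an external program, for example subprocess.run(["Rscript", "analyze.R"], input=data, capture_output=True, text=True).stdout, where analyze.R is a hypothetical script that reads CSV on standard input and writes CSV to standard output.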

Teams with complex workloads, or with several data sources and destinations, are the ones that benefit most from this approach in the long term.